Continually improving the customer experience and customer satisfaction with service support is a goal of every service desk. Many metrics influence customer satisfaction, but the four service desk agent performance metrics discussed here are among those with the greatest impact. Each should be monitored in real time, and its trend should also be tracked over longer periods.

1. Forecast Accuracy

Forecast accuracy measures how closely the forecast number of calls for a given period matches the number of calls actually received. If the forecast anticipates 1,000 calls during a certain period but the service desk actually receives 1,500, customers will not be served as well as they should be. With too few agents to handle the call volume, wait times will increase. Average Speed to Answer and Abandoned Call Rate will both climb into unacceptable territory. Cost per Contact will rise because of long wait times on toll-free lines, and Agent Utilization will surge to undesirable levels as agents struggle to keep up with the call volume.

On the other hand, if only 500 calls are received when 1,000 were forecast, Agent Utilization will plummet and Cost per Contact will skyrocket. Customers should experience excellent service during such a period, but at the expense of the service desk, which is overstaffed.

As such, forecast accuracy affects both the cost of operation and the customer experience. There are many ways to calculate forecast accuracy for a service desk, ranging from simple formulas to more complex approaches that use standard deviation and correlation coefficients. Here are two approaches that are straightforward and can yield useful information.

For a single period, forecast accuracy can be expressed as a percentage error using this formula:

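$$\text{Forecast Accuracy (\% Error)} = \frac{\text{Total Calls} - \text{Forecast}}{\text{Total Calls}} \times 100$$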

For example, if the service desk receives a total of 1,000 calls after forecasting only 947:

$$\frac{1{,}000 - 947}{1{,}000} \times 100 = 5.3\%$$

Note that “Total Calls” refers to all calls made to the service desk, not the number of calls answered. When a customer calls, abandons the call, and then calls again, using Total Calls may slightly overstate the actual volume. Even so, this is a better approach than counting only the calls that were answered, which can understate the actual call volume substantially.
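As a minimal sketch, the single-period calculation can be written in Python. It assumes the error is measured as Total Calls minus Forecast, divided by Total Calls, so a positive value means the desk under-forecast and a negative value means it over-forecast. The function name `forecast_error_pct` is hypothetical.

```python
def forecast_error_pct(total_calls: int, forecast: int) -> float:
    """Return the signed forecast error for one period, as a percentage.

    Assumption: the error is measured against Total Calls (every call
    offered, including abandoned ones), not against calls answered.
    Positive = under-forecast (more calls arrived than expected);
    negative = over-forecast (fewer calls arrived than expected).
    """
    if total_calls <= 0:
        raise ValueError("total_calls must be positive")
    # Multiply before dividing so simple cases avoid floating-point noise.
    return (total_calls - forecast) * 100 / total_calls


if __name__ == "__main__":
    # Worked example from the text: 1,000 calls received, 947 forecast.
    print(forecast_error_pct(1000, 947))             # 5.3
    # Under-staffing scenario: 1,500 received, 1,000 forecast.
    print(round(forecast_error_pct(1500, 1000), 2))  # 33.33
    # Over-staffing scenario: 500 received, 1,000 forecast.
    print(forecast_error_pct(500, 1000))             # -100.0
```

Under this sign convention, the direction of the miss is preserved, which matters when the same calculation is reused across several periods.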

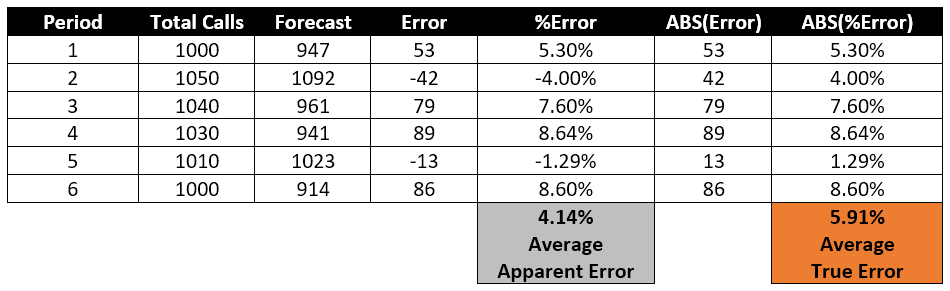

A similar approach can be used to evaluate forecast accuracy over several periods. For example, assume there are six periods, which could be days, weeks, months, or some other interval. The table shows the total calls received during each period and the forecast made for each. The “Error” column shows the over- or under-forecast as the difference between Total Calls and the Forecast. The “% Error” column expresses that same difference as a percentage, and the “Average Apparent Error” across all six periods is 4.14% (highlighted in grey).

This is only an apparent error, because positive and negative errors partly cancel one another out when they are averaged.

A more accurate measure uses the absolute value of each error. The “ABS(Error)” column shows these absolute values, so over-forecasts and under-forecasts both count against accuracy instead of offsetting each other. Similarly, the “ABS(% Error)” column shows each absolute error as a percentage. The “Average True Error,” highlighted in orange, therefore provides a more accurate measure of forecast accuracy. Most industry experts suggest that this forecast error should remain at five percent or less.
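The difference between the two averages can be sketched in Python. The six periods of data below are invented for illustration (they are not the values in the table), each period's % error is assumed to be measured against Total Calls, and the function names are hypothetical. An average of absolute percentage errors like this one is what forecasters commonly call the mean absolute percentage error (MAPE).

```python
from statistics import mean


def percent_errors(totals: list[int], forecasts: list[int]) -> list[float]:
    """Signed % error per period: (Total Calls - Forecast) / Total Calls."""
    if len(totals) != len(forecasts):
        raise ValueError("totals and forecasts must have the same length")
    return [(t - f) * 100 / t for t, f in zip(totals, forecasts)]


def average_apparent_error(totals: list[int], forecasts: list[int]) -> float:
    # Signed errors: over- and under-forecasts partly cancel each other out.
    return mean(percent_errors(totals, forecasts))


def average_true_error(totals: list[int], forecasts: list[int]) -> float:
    # Absolute errors: every miss counts, whichever direction it was in.
    return mean([abs(e) for e in percent_errors(totals, forecasts)])


if __name__ == "__main__":
    # Hypothetical data for six periods (not taken from the table).
    totals = [1000, 1200, 800, 1000, 1500, 500]
    forecasts = [950, 1260, 760, 1030, 1470, 510]

    apparent = average_apparent_error(totals, forecasts)
    true_err = average_true_error(totals, forecasts)
    print(f"Average apparent error: {apparent:.2f}%")  # 0.33%
    print(f"Average true error:     {true_err:.2f}%")  # 3.67%
    print("Within 5% target:", true_err <= 5.0)        # True
```

In this invented data every period misses by two to five percent, yet the signed misses nearly cancel, so the apparent error looks far better than the true error.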

2. First Contact Resolution (FCR)

This metric must take every form of contact into account, and it has a powerful effect on customer satisfaction. Imagine contacting a service desk several times over a few weeks, only to find that on three of five calls the agent who greets you is unable to resolve your incident. Many callers would perceive the service desk as being staffed with untrained agents, and that perception would extend to the company as a whole: “What’s wrong with XYZ company? I have to talk to two or three different people just to get an answer.”

The most useful formula for FCR divides the number of contacts resolved by first-tier support agents by the number of contacts received. Note that “Total Contacts Received” needs to be adjusted to remove contacts that, by policy, cannot be handled by first-tier agents. For instance, a customer calling to replace a failed hard drive must be referred to the team that goes on site to handle hardware issues, which is usually not the first tier of agents.

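$$\text{FCR (\%)} = \frac{\text{Contacts Resolved by First-Tier Agents}}{\text{Total Contacts Received} - \text{Contacts Excluded by Policy}} \times 100$$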
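A minimal Python sketch of the adjusted FCR calculation follows. The function and parameter names are hypothetical and the contact counts are invented; the point is that contacts excluded by policy (such as hardware replacements routed to the on-site team) are removed from the denominator before dividing.

```python
def first_contact_resolution_pct(resolved_first_tier: int,
                                 total_contacts: int,
                                 excluded_by_policy: int = 0) -> float:
    """Return FCR as a percentage of contacts first-tier agents may handle.

    excluded_by_policy counts contacts that, by policy, must go to another
    team (for example, on-site hardware replacement), so they are removed
    from the denominator.
    """
    eligible = total_contacts - excluded_by_policy
    if eligible <= 0:
        raise ValueError("no contacts are eligible for first-tier resolution")
    if not 0 <= resolved_first_tier <= eligible:
        raise ValueError("resolved_first_tier must be between 0 and eligible")
    return resolved_first_tier * 100 / eligible


if __name__ == "__main__":
    # Hypothetical month: 1,000 contacts across all channels, 100 of them
    # hardware replacements that policy routes to the on-site team.
    print(first_contact_resolution_pct(720, 1000, excluded_by_policy=100))  # 80.0
    # Without the policy adjustment, the same desk appears to score lower.
    print(first_contact_resolution_pct(720, 1000))  # 72.0
```

Skipping the adjustment penalizes first-tier agents for contacts they were never allowed to resolve.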

3. Average Handle Time (AHT)

Also known as Contact Handle Time, this metric measures the average time a service desk agent spends resolving a customer incident. That includes the time spent speaking (or chatting online) with the customer, any time the customer spends on hold after the call has begun, the time spent wrapping up the call, and any after-call work required to fully resolve the incident, such as updating the ticketing or CRM system. AHT is the sum of these times divided by the number of calls handled.

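$$\text{AHT} = \frac{\text{Talk Time} + \text{On-Hold Time} + \text{Wrap-Up Time} + \text{After-Call Work Time}}{\text{Number of Calls Handled}}$$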

Some service desk managers leave On-Hold Time out of this metric, which is a mistake. Excluding On-Hold Time can understate AHT when agents place callers on hold either to “take a break” from the call or to shorten their measured AHT.
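The effect of leaving On-Hold Time out can be sketched in Python. The `HandledCall` record, its field names, and the three sample calls are hypothetical; the calculation sums talk, hold, wrap-up, and after-call work time and divides by the number of calls handled, with an option to drop hold time for comparison.

```python
from dataclasses import dataclass


@dataclass
class HandledCall:
    """Per-call timings in seconds (hypothetical record layout)."""
    talk: int             # time speaking or chatting with the customer
    on_hold: int          # time the customer spent on hold after the call began
    wrap_up: int          # time spent wrapping up the call
    after_call_work: int  # e.g. updating the ticketing or CRM system


def average_handle_time(calls: list[HandledCall], include_hold: bool = True) -> float:
    """Return AHT in seconds: total handle time / number of calls handled."""
    if not calls:
        raise ValueError("at least one handled call is required")
    total = 0
    for call in calls:
        total += call.talk + call.wrap_up + call.after_call_work
        if include_hold:
            total += call.on_hold
    return total / len(calls)


if __name__ == "__main__":
    calls = [
        HandledCall(talk=300, on_hold=60, wrap_up=30, after_call_work=90),
        HandledCall(talk=240, on_hold=0, wrap_up=20, after_call_work=40),
        HandledCall(talk=420, on_hold=180, wrap_up=45, after_call_work=75),
    ]
    print(average_handle_time(calls))                      # 500.0
    print(average_handle_time(calls, include_hold=False))  # 420.0
```

In this sample, dropping hold time understates AHT by 80 seconds per call, and the gap grows the more agents rely on hold.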

Where agents have been suitably trained to handle the calls they receive, Average Handle Time reflects the complexity of the incidents the service desk handles. An agent resolving a complex international banking issue, for instance, is likely to have a longer average handle time than an agent answering basic questions about opening a consumer bank account.

Average Handle Time can also harm the customer experience if management focuses on it too heavily. Agents who feel pressure to resolve every call as quickly as possible may rush through calls or unnecessarily escalate them to the second tier of agents. In either case, the incident is not resolved to the caller's satisfaction, which can lower customer satisfaction.

4. Service Desk Efficiency

If you define efficiency as the work input required to achieve a particular output, the simplest measure of efficiency requires only two metrics.

The first relates to the cost of operation, which measures the work input. You could use any of several cost-related metrics, but perhaps the simplest is the Cost per Contact metric described elsewhere.

The second metric measures the output, and for this the Customer Satisfaction metric serves well.

Tracking these two metrics over time gives you a reasonable measure of efficiency, and there’s no question that an efficient service desk influences the customer experience.

Actively Monitor Your Operation

Monitoring these four metrics effectively requires a tool that provides real-time dashboards with trend analysis and automated reporting. It should consolidate data from multiple service support centers and from multiple technology sources, regardless of vendor. When you bring all the relevant data from those systems into a single portal, you’ll finally have actionable performance metrics with which to make informed business decisions and optimize your operations.